Personal, business, and even policy decisions are increasingly made by algorithms.

And an algorithm with almost no human involvement can’t be “biased,” can it?

Unfortunately, it can.

Every algorithm rests on certain assumptions, and its results depend heavily on them.

The insights and ideas of the model’s designer end up having a huge impact on the outcomes.

In this blog, we discuss one specific consequence of this: gender bias.

What is it exactly?

Gender bias in data is a systematic distortion of results, caused by external factors, that specifically relates to gender.

Many algorithms are based on data that was not collected from a representative group.

For example, input from women is regularly missing.

This can lead to biased or one-sided results.

But what if important decisions are made based on the output?

Gender data bias is visible in practice, but it also occurs in “hidden” form, for example in machine learning.

Examples of gender data bias in practice

- When women are involved in a car accident, they are 47% more likely than men to be seriously injured and 71% more likely to be moderately injured. A woman is also 17% more likely to die in a car accident than a man[2]. Why is this? Women are, on average, shorter, so they sit further forward and more upright to have enough visibility. These higher risks stem from the fact that cars are designed using crash test dummies based on the average man. In 2011, the first female crash test dummies were taken into production in the United States.

- In 2014, Amazon introduced an algorithm to automatically select top talent from a large number of resumes. Tellingly, it turned out that resumes containing the word “women’s” were rated lower. Even otherwise identical resumes that differed only in the applicant’s gender were rated differently. This was because the model was trained on resumes from the tech sector going back 10 years. Because women are underrepresented in this sector, the training data consisted mostly of men’s resumes.

- In the medical community, heart attacks are also less likely to be recognized in women than in men, and drugs are more likely to fail in women simply because they are tested on groups in which the majority are men[6].

The above examples have a similar cause.

The underlying data, whether it feeds a design or trains an algorithm, is not representative of the entire population, and in these cases this has serious, and sometimes even dangerous, consequences.
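A first check for this kind of problem is simply to count how each group is represented in a dataset. The snippet below is a minimal sketch, not a complete audit: the records, the `gender` field, the 50/50 reference shares, and the 10-percentage-point tolerance are all hypothetical values chosen for illustration.

```python
from collections import Counter

def group_shares(records, key="gender"):
    """Return the fraction of records belonging to each group."""
    counts = Counter(record[key] for record in records)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}

def underrepresented(shares, reference, tolerance=0.10):
    """List groups whose share is more than `tolerance` below the reference share."""
    return [
        group
        for group, expected in reference.items()
        if shares.get(group, 0.0) < expected - tolerance
    ]

# Hypothetical training set: 85 records from men, 15 from women.
training = [{"gender": "male"}] * 85 + [{"gender": "female"}] * 15

# Assumed reference population (illustrative, not real statistics).
reference = {"male": 0.5, "female": 0.5}

shares = group_shares(training)
flagged = underrepresented(shares, reference)
print(shares)
print("Underrepresented groups:", flagged)
```

A check like this does not prove that a model will be biased, but it flags datasets that deserve a closer look before they are used.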

Gender data bias in AI and Data Science

Gender bias can also occur in machine learning models, including self-learning algorithms, which would be expected to be neutral.

If there is already a bias in the data that is put into the algorithm, it may not only be reflected in the output of the model; it can even be magnified.
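One simple way to make this magnification measurable is to compare how often a group appears in the training data with how often the model predicts that group. The sketch below assumes hypothetical gender annotations for images of a single activity; the counts are invented for illustration and are not taken from any of the studies cited here.

```python
def share(group_labels, group="woman"):
    """Fraction of examples attributed to `group`."""
    return sum(1 for g in group_labels if g == group) / len(group_labels)

# Hypothetical toy data for images of one activity:
# gender as annotated in the training set, and gender as predicted by a model.
training_genders = ["woman"] * 66 + ["man"] * 34
predicted_genders = ["woman"] * 80 + ["man"] * 20

training_share = share(training_genders)
predicted_share = share(predicted_genders)

# A positive value means the model exaggerates the imbalance in its training data.
amplification = predicted_share - training_share

print(f"Share of women in training data: {training_share:.2f}")
print(f"Share of women in predictions:   {predicted_share:.2f}")
print(f"Amplification:                   {amplification:+.2f}")
```

In this toy case the model does not just reproduce the skew in its training data, it increases it.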

Some of the techniques and methods where this occurs within data science and/or AI are highlighted below.

Image Recognition[4]

Image recognition models can learn from images without being given specific prior knowledge.

In image recognition software, including software offered by major providers, stereotypes clearly appear.

Images of shopping and laundry are linked to women; coaching (of sports) and shooting to men.

Also, men standing in kitchens are more often labeled as women by this software.

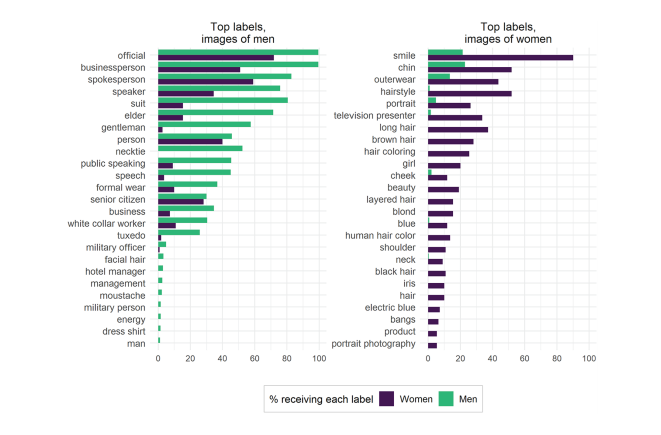

In addition, images of men and images of women clearly receive different labels, for example from Google Cloud Vision.

Images of women are much more often labeled based on appearance, while images of men are labeled based on professional characteristics.

This can also be seen in the image above.

Women are also less likely than men to be recognized in images at all.

Voice Recognition

Voice AI, or speech recognition, is an increasingly common technology.

Google has its own widely used model for this purpose.

Google itself has said that its speech recognition has an accuracy of 95%.

However, it turns out that this accuracy is not the same for every group.

The tool in question appears to be 13% more accurate for men than for women.

It also works better for some dialects and accents than for others[5].

If accuracy were measured separately for each group, targeted improvements could be made.

This, too, starts with the data: when a diverse group is included, a more reliable picture of the accuracy emerges.
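Measuring accuracy per group instead of overall takes only a few lines. The following sketch assumes that each evaluated item (for example, a transcribed word) is tagged with the speaker's group and marked as correct or incorrect; the groups and counts are made up for illustration.

```python
from collections import defaultdict

def accuracy_by_group(results):
    """Compute accuracy per group from (group, is_correct) pairs."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, is_correct in results:
        totals[group] += 1
        correct[group] += int(is_correct)
    return {group: correct[group] / totals[group] for group in totals}

# Hypothetical evaluation results: (speaker group, was the item recognized correctly?)
results = (
    [("men", True)] * 9 + [("men", False)] * 1
    + [("women", True)] * 7 + [("women", False)] * 3
)

overall = sum(is_correct for _, is_correct in results) / len(results)
per_group = accuracy_by_group(results)
gap = per_group["men"] - per_group["women"]

print(f"Overall accuracy: {overall:.2f}")
for group, accuracy in per_group.items():
    print(f"Accuracy for {group}: {accuracy:.2f}")
print(f"Gap between groups: {gap:.2f}")
```

The overall figure hides the difference between the groups; only the breakdown shows where improvement is needed.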

Natural Language Processing and Word Embeddings

Gender bias can also occur in Natural Language Processing.

This occurs, for example, when using word embeddings[7].

Word embeddings are trained on the co-occurrence of words in text corpora.

For example, in such a model trained on Google News data, the distance between the words “nurse” and “woman” turns out to be a lot smaller than the distance between the words “nurse” and “man”.

This indicates gender bias.
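To illustrate how such a comparison works, the sketch below computes cosine similarity, a common closeness measure for word embeddings, where a higher similarity corresponds to a smaller distance. The three-dimensional vectors are invented toy values, not real embeddings from the Google News model, which has hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy vectors, chosen by hand for illustration only.
vectors = {
    "man": [1.0, 0.5, 0.0],
    "woman": [-1.0, 0.5, 0.0],
    "nurse": [-0.6, 0.4, 0.7],
}

sim_woman = cosine_similarity(vectors["nurse"], vectors["woman"])
sim_man = cosine_similarity(vectors["nurse"], vectors["man"])

print(f"similarity(nurse, woman) = {sim_woman:.3f}")
print(f"similarity(nurse, man)   = {sim_man:.3f}")
```

With a real pretrained model, the same comparison would be run on the vectors the model provides for these words.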

For machine-learning models, there are so-called ‘debias’ methods that reduce the bias.

For example, the word ‘nurse’ from the previous example can be moved closer to the midpoint between ‘man’ and ‘woman’.
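Moving a word toward the middle can be done by removing the part of its vector that points along a gender direction. The sketch below is a simplified version of the "neutralize" idea described in [7]: it assumes the gender direction can be estimated from the single pair 'woman' and 'man', and it uses invented toy vectors rather than real embeddings.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cosine_similarity(a, b):
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

def neutralize(word, first, second):
    """Remove from `word` its component along the direction first - second."""
    direction = [x - y for x, y in zip(first, second)]
    norm = math.sqrt(dot(direction, direction))
    unit = [x / norm for x in direction]
    projection = dot(word, unit)
    return [w - projection * u for w, u in zip(word, unit)]

# Toy vectors, chosen by hand for illustration only.
# 'man' and 'woman' have equal length here, which is why the similarities
# come out exactly equal after neutralizing.
man = [1.0, 0.5, 0.0]
woman = [-1.0, 0.5, 0.0]
nurse = [-0.6, 0.4, 0.7]

nurse_debiased = neutralize(nurse, woman, man)

before = (cosine_similarity(nurse, woman), cosine_similarity(nurse, man))
after = (
    cosine_similarity(nurse_debiased, woman),
    cosine_similarity(nurse_debiased, man),
)

print(f"Before: woman={before[0]:.3f}, man={before[1]:.3f}")
print(f"After:  woman={after[0]:.3f}, man={after[1]:.3f}")
```

Only the component along the assumed gender direction is removed; the remaining components of the toy 'nurse' vector are left unchanged.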

It is also often possible to reduce the magnification of bias that some machine-learning models cause.

Still, in this case, prevention is better than cure; after all, the cause is already in the data being put into the algorithm.

In addition, these debias methods can only be applied when the bias has actually been noticed by the designer.

Only when you as a data scientist are aware of the bias that is in the data can you do something about it.

Gender bias is not the only bias that occurs in such algorithms.

Any algorithm is highly subject to the assumptions underlying it, and gender bias is therefore only an illustration of the importance of the data that is put into an algorithm.

Conclusion

Gender data bias plays a big role and clearly has implications in practice.

But the first steps have been taken.

Addressing the problem first requires recognition of the problem.

It seems that awareness is increasing and this issue is becoming more visible.

The “person” behind the algorithm is therefore of great importance to its outcome.

More women in data science would be a good start!

- [1] A. Linder & M. Y. Svensson (2019). Road safety: the average male as a norm in vehicle occupant crash safety assessment. Interdisciplinary Science Reviews, 44(2), 140-153. DOI: 10.1080/03080188.2019.1603870

- [2] https://www.theguardian.com/lifeandstyle/2019/feb/23/truth-world-built-for-men-car-crashes

- [3] https://www.bbc.com/news/technology-45809919

- [4] Schwemmer, C., Knight, C., Bello-Pardo, E. D., Oklobdzija, S., Schoonvelde, M., & Lockhart, J. W. (2020). Diagnosing gender bias in image recognition systems. Socius, 6, 2378023120967171.

- [5] Tatman, R. (2017). Gender and Dialect Bias in YouTube’s Automatic Captions.

- [6] Hamberg, K. (2008). Gender bias in medicine. Women’s Health, 4(3), 237-243.

- [7] Bolukbasi, T., Chang, K. W., Zou, J. Y., Saligrama, V., & Kalai, A. T. (2016). Man is to computer programmer as woman is to homemaker? Debiasing word embeddings. Advances in Neural Information Processing Systems, 29, 4349-4357.