Large language models (LLMs) such as ChatGPT are popular, not only for personal use but also within organizations. Searching business documents for specific information, summarizing large amounts of text and processing customer data more efficiently are just a few applications whose value is easy to see.

But can you simply use LLMs within your organization? What does it cost? And what happens to your data? LLMs carry risks, but how large those risks are depends on many factors, most obviously which LLM you choose and how you configure it. In this blog, we explain which types of LLMs there are and how to use your data securely with this technology, and we provide an overview so you can choose the right LLM for your organization.

LLM risks

Before we get into the different types of LLMs, let’s discuss two risks that organizations often ask us about: the confidentiality of data (do storage and use meet GDPR requirements?) and harmful or incorrect output.

- Confidentiality of data

Negligent data protection can have costly consequences. Sensitive company data may end up outside your own walls and can even become visible to third parties. In 2023, this kind of negligence led to data leaks at several companies, including Samsung, where crucial trade secrets were leaked to OpenAI’s ChatGPT. Leaks like this put trade secrets out on the street, and when personal data is involved they can also violate the EU’s General Data Protection Regulation (GDPR).

- Harmful/incorrect output

Unintentionally generating harmful text is another risk of using LLMs. Through their training data, models can absorb biases and stereotypes, which can lead to texts that are offensive, discriminatory or even hateful. Models can also produce answers that sound plausible but are factually wrong, often called hallucinations. Besides the ethical concerns, harmful or incorrect output can have legal consequences: companies can be held liable for damages resulting from texts they generate or distribute.

These risks can be challenging, but they can be managed.

Different types of LLMs

ChatGPT

ChatGPT is the online chat application built on OpenAI’s LLMs. It’s very convenient: thanks to its user-friendly interface, you don’t have to be a techie to use it. If you use ChatGPT without adjusting any settings, you agree that everything you enter is stored on OpenAI’s servers. If you enter personal data there, you risk violating the GDPR, because the data is stored in the United States (with OpenAI) instead of in Europe. Sharing trade secrets there is just as risky. The cost of using ChatGPT depends on how you choose to use it: you can pay a monthly subscription or pay per use. The exact rate depends on the model you select and how heavily you use it.

OpenAI API

An alternative with more attention to privacy is to use OpenAI’s models directly through its Application Programming Interface (API). This lets you send requests to the models behind ChatGPT without going through a user interface (UI). The UI is geared toward simple interaction, whereas the API gives you more control, which is especially useful for organizations that want to build OpenAI models into their own applications. The model that generates the text is the same as in ChatGPT, but you supply your own interface.
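To make the difference with the UI concrete, here is a minimal sketch of what an API call involves, assuming OpenAI’s Chat Completions REST endpoint and only Python’s standard library (no SDK). The model name `gpt-4o-mini` and the helper names `build_chat_request` and `send` are illustrative assumptions, not taken from this blog; check OpenAI’s API reference for current models and parameters.

```python
import json
import urllib.request

# OpenAI's Chat Completions endpoint (plain REST, no SDK needed).
OPENAI_CHAT_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, api_key: str,
                       model: str = "gpt-4o-mini") -> urllib.request.Request:
    """Build, but do not send, a Chat Completions request.

    The default model name is an assumption; use any chat model your account offers.
    """
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You answer questions about internal documents."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        OPENAI_CHAT_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request over the network and return the first answer's text."""
    with urllib.request.urlopen(request, timeout=60) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]
```

Building and sending are kept separate so you can inspect exactly what would leave your organization before anything is sent; `send(build_chat_request(prompt, api_key=...))` performs the actual call.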

Since March 1, 2023, OpenAI no longer uses prompts and responses sent through the API to train or improve its models, unless you explicitly opt in. Your documents are therefore not fed into future models, which is good news for the confidentiality of corporate information. For personal data protection, OpenAI offers an important option: you can ask OpenAI to supplement its standard terms with its Data Processing Addendum (DPA). As with many large U.S. tech companies, you cannot impose your own data processing agreement and must accept OpenAI’s version. Still, a protective data processing agreement is a key GDPR requirement when you share personal data with “processors” such as OpenAI. The API is billed pay-as-you-go: you pay for the tokens processed in each call.

Azure OpenAI service

This Microsoft service runs on Azure, a cloud hosting platform: an all-in-one environment where you can store, manage and access applications while the entire infrastructure is taken care of for you. Many organizations already use the Microsoft Azure cloud platform, and with the Azure OpenAI Service you can expect the same security standards as with other Azure services. So yes, you are using the same models as ChatGPT, but you manage them through Azure.

The Azure OpenAI Service gives you more control over your data and the texts that OpenAI models generate. By default, prompts and responses are stored temporarily, for up to 30 days, in the same region as your resource. Microsoft uses this data for debugging and for investigating possible misuse of the service. If you prefer that this data is not stored, you can request this from Microsoft. For data protection, you can rely on the standard data processing agreement that Microsoft provides for Azure, including standard contractual clauses. Again, you pay as you go.
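The difference with the plain OpenAI API shows up in where requests go. As a sketch, assuming Azure OpenAI’s REST interface: you call your own resource, created in a region you choose, and a model deployment you named yourself. The resource name, deployment name and `api-version` value below are hypothetical placeholders; check the Azure OpenAI documentation for the current API version.

```python
import json
import urllib.request

# Assumed API version; check the Azure OpenAI docs for the current one.
AZURE_API_VERSION = "2024-02-01"


def build_azure_chat_request(resource: str, deployment: str, prompt: str,
                             api_key: str,
                             api_version: str = AZURE_API_VERSION) -> urllib.request.Request:
    """Build, but do not send, a chat request to your own Azure OpenAI resource."""
    # The host is *your* resource (which lives in the region you picked),
    # and the path names a deployment you created there.
    url = (f"https://{resource}.openai.azure.com/openai/deployments/"
           f"{deployment}/chat/completions?api-version={api_version}")
    body = {"messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        # Azure accepts the resource key in an "api-key" header.
        headers={"api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )
```

Note that the body carries no model name: the deployment in the URL determines which model answers.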

Databricks

Databricks is also a hosting platform where you can set up and manage LLMs. It offers many models to choose from, not only OpenAI’s but also other, more specialized ones. It is a versatile environment in which you can fine-tune your LLM precisely, integrate it with data pipelines and handle large deployments efficiently, which makes it well suited to production environments that require high performance. It is more technical than Azure, though, so you need knowledge of data science and data engineering, which makes it less user-friendly for non-technical users. Databricks also offers comprehensive data security and supports GDPR compliance. Using LLMs through Databricks is generally more expensive than the alternatives. Again, you pay as you go.

Open-source model/own LLM

In this option, an open-source model forms the basis of your LLM. You can choose from a wide variety of open-source models, for example based on their size. Running a local LLM instead of an API-based (closed-source) one gives you more control: all data is processed within your own organization, which reduces the risk of data breaches. You are in charge of security because you manage access to the model, which helps minimize security risks and lets you tailor your approach. And because you have direct control over how data is handled and stored, it is easier to comply with the GDPR.
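To illustrate what “all data is processed within your own organization” means in practice, here is a sketch that targets a model served on your own machine. It assumes a local server such as Ollama (not mentioned above), which by default listens on port 11434 and exposes a `/api/generate` endpoint; the model name `llama3` and the helpers `build_local_request`, `send_local` and `stays_on_premises` are illustrative assumptions.

```python
import json
import urllib.parse
import urllib.request

# Assumption: an Ollama server runs on this machine on its default port.
LOCAL_GENERATE_URL = "http://localhost:11434/api/generate"


def build_local_request(prompt: str, model: str = "llama3") -> urllib.request.Request:
    """Build a non-streaming generate request for a locally hosted model."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        LOCAL_GENERATE_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_local(request: urllib.request.Request) -> str:
    """Send the request to the local server and return the generated text."""
    with urllib.request.urlopen(request, timeout=300) as response:
        return json.load(response)["response"]


def stays_on_premises(request: urllib.request.Request) -> bool:
    """Rough check that a request targets this machine, not an external service."""
    host = urllib.parse.urlsplit(request.full_url).hostname
    return host in {"localhost", "127.0.0.1", "::1"}
```

A check like `stays_on_premises` is deliberately rough; in practice you would enforce this with network rules rather than in application code.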

This option takes the most time to implement, because you have to set up the entire infrastructure yourself. To use an open-source LLM this way, you host it on your own infrastructure (on-premises) with powerful GPUs and sufficient storage space. Plan and invest in this carefully to prevent your application from underperforming or running slowly. Also, unlike with the closed models mentioned above, you bear more responsibility for the output of open-source models yourself, which is both an advantage and a disadvantage. You have to watch not only the accuracy of the answers but also possible offensive content. You can do this by applying LLM techniques that act as guardrails. Of course, with the other LLMs you must also keep an eye on accuracy and keep thinking critically.
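Guardrails range from dedicated moderation models to simple rule-based checks. The sketch below shows only the simplest kind: a rule-based filter that inspects a generated answer before the user sees it and replaces it with a fallback message if it fails. The blocklist, length limit and fallback text are hypothetical; a production setup would use a proper moderation classifier, and this check does nothing for factual accuracy.

```python
import re
from dataclasses import dataclass

# Hypothetical blocklist; real guardrails typically use a moderation model instead.
BLOCKED_TERMS = {"idiot", "stupid"}
FALLBACK = "Sorry, I can't give a reliable answer to that. Please contact a colleague."


@dataclass
class GuardrailResult:
    allowed: bool
    text: str
    reason: str = ""


def apply_output_guardrail(answer: str, max_chars: int = 2000) -> GuardrailResult:
    """Check a generated answer before it reaches the user."""
    # Lowercase word tokens, so "Stupid" and "stupid" are treated the same.
    words = set(re.findall(r"[a-z']+", answer.lower()))
    hits = words & BLOCKED_TERMS
    if hits:
        return GuardrailResult(False, FALLBACK, f"blocked terms: {sorted(hits)}")
    if not answer.strip():
        return GuardrailResult(False, FALLBACK, "empty answer")
    if len(answer) > max_chars:
        return GuardrailResult(False, FALLBACK, "answer too long")
    return GuardrailResult(True, answer)
```

Returning a `reason` alongside the fallback text lets you log why an answer was blocked without showing that detail to the end user.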

Cost

If you don’t look carefully at all the options, costs can quickly skyrocket and jeopardize the profitability and feasibility of your project. But don’t worry: with a careful approach, you can get the most out of your investment!

The cost of using LLMs depends on several factors:

- Model selection: More advanced models with more capabilities are often more expensive. In return, they are often trained on more data or can process more data as input.*

- Use intensity: How often and how extensively you use the LLM affects the cost. Weigh that cost against the benefit the usage delivers.

- Infrastructure: The cost of the infrastructure needed to host and manage the LLM. This is an investment in the performance and reliability of your LLM.

Different payment models are available for different LLM implementations:

- Subscriptions: Monthly or annual payments for use within the limits of the plan.

- Pay-as-you-go: Pay per token processed or per API call. Prices are usually quoted per 1,000 (or per 1 million) tokens; 1,000 tokens is roughly 750 English words.**

- On-premises: Running the LLM on your own infrastructure, which requires an upfront investment.
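The pay-as-you-go arithmetic is easy to sketch. The function below estimates a monthly bill from the number of requests and the length of prompts and answers, using the rule of thumb above (1,000 tokens ≈ 750 words). The per-1,000-token prices are hypothetical inputs; many providers charge different rates for input and output tokens, so the sketch takes both.

```python
def estimate_monthly_cost(requests_per_day: int, input_words: int, output_words: int,
                          price_in_per_1k: float, price_out_per_1k: float,
                          days: int = 30) -> float:
    """Rough pay-as-you-go estimate; prices are per 1,000 tokens (hypothetical)."""
    # Rule of thumb: 1,000 tokens is roughly 750 English words.
    input_tokens = input_words * 1000 / 750
    output_tokens = output_words * 1000 / 750
    cost_per_request = (input_tokens / 1000) * price_in_per_1k \
        + (output_tokens / 1000) * price_out_per_1k
    return round(requests_per_day * days * cost_per_request, 2)
```

For example, 200 requests a day with 750-word prompts (about 1,000 tokens) and 375-word answers (about 500 tokens), at hypothetical prices of 0.01 and 0.03 per 1,000 input and output tokens, comes to 200 × 30 × (0.01 + 0.5 × 0.03) = 150 per month.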

There are multiple ways to deploy LLMs, each with its own characteristics. Depending on your needs, you can find the option that best meets your criteria.

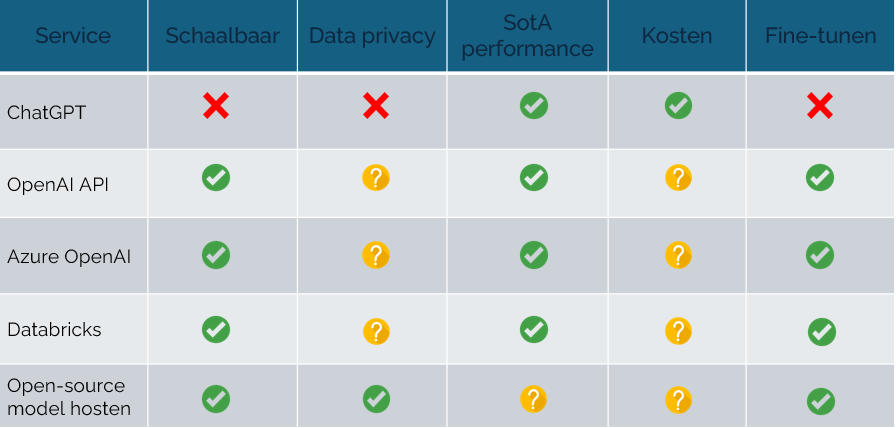

Characteristics per service

? = Depends on settings and degree of use

Scalable: Scalability means that the service can grow with your needs, handling more users, requests and data without a loss of performance, and that it can be applied flexibly to new tasks and situations. This makes it convenient and versatile for all kinds of specific applications.

Data privacy: Data privacy is about how well an LLM protects the privacy of user data, ensuring that personal information is stored, processed and shared securely and in accordance with privacy laws.

SotA performance: “State-of-the-art” (SotA) means that the model is currently considered among the best available in terms of accuracy, relevance and overall performance.

Cost: This refers to the costs involved in using an LLM, including infrastructure, usage, maintenance and any licenses.

Fine-tuning: Fine-tuning means that you adapt the LLM to specific requirements, data or tasks to improve performance for a particular usage scenario. For example, you can add specific domain knowledge. Fine-tuning makes the model even more suitable for particular applications.
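As an illustration of what fine-tuning data can look like, here is a sketch that turns question-and-answer pairs into JSON Lines, assuming the chat-style format used by OpenAI’s fine-tuning (one `{"messages": [...]}` object per line). Other platforms may expect different formats; the function name and the system prompt you pass in are hypothetical.

```python
import json


def to_finetune_jsonl(examples, system_prompt):
    """Turn (question, answer) pairs into chat-format JSON Lines text."""
    lines = []
    for question, answer in examples:
        record = {
            "messages": [
                {"role": "system", "content": system_prompt},
                {"role": "user", "content": question},
                # The assistant turn is the answer the model should learn to give.
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"
```

Each pair becomes one self-contained line, which is what lets a training file hold many examples and be validated or split line by line.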

Conclusion

LLMs help organizations become more efficient and innovative. Which LLM to choose depends on your organization’s needs. In many cases, the Microsoft Azure OpenAI Service is an excellent solution: it is user-friendly and offers strong data security, especially for organizations that already use Azure. For more technical customization of your LLM, Databricks is a good alternative that also provides robust data security. If you want complete control over data processing, hosting an open-source LLM on your own infrastructure may be the right choice, giving you full control over the use of your LLM, your data and its output.

In short, with the right approach, LLMs can be a valuable addition to any organization. Would you like specific advice for your organization? If so, contact us and we’ll be happy to help.

* To get an indication of LLM performance, please refer to this website: https://huggingface.co/spaces/lmsys/chatbot-arena-leaderboard

** To get an indication of current prices per token/word/character, please refer to this website with a handy calculation tool: https://docsbot.ai/tools/gpt-openai-api-pricing-calculator