[vc_row type=”in_container” full_screen_row_position=”middle” scene_position=”center” text_color=”dark” text_align=”left” top_padding=”45″ overlay_strength=”0.3″ shape_divider_position=”bottom” bg_image_animation=”none” shape_type=””]

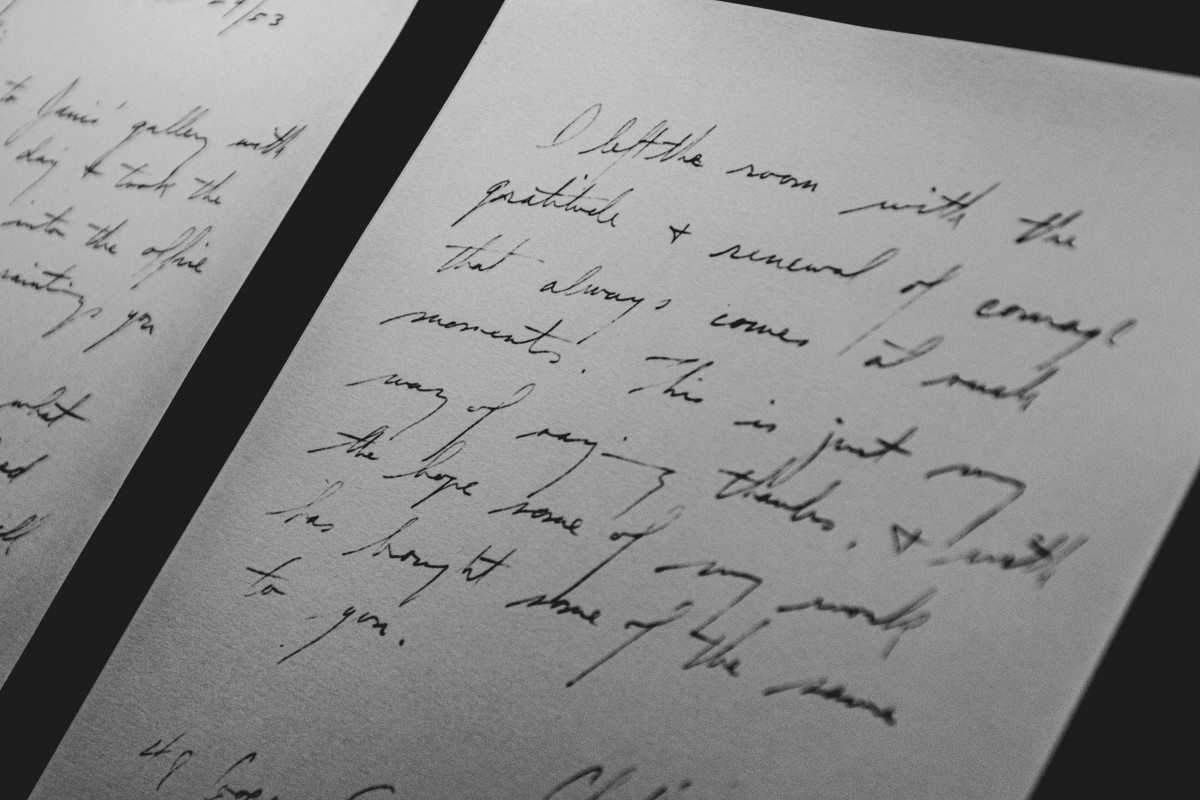

Think of books, letters, documents or newspapers.

The advantage of digitization is firstly preservation; paper decays and bytes do not.

A second advantage is that digitized data can be made very easily accessible.

For example, today we have digital access to 200-year-old Dutch political documents[1], 400 years of newspapers[2],500-year-old books.

Even the popularity of words is currently accessible to us[3].

This data is not only interesting, it is also a goldmine for historians, linguists and other scientists.

Digitization makes this data much easier to use.

Instead of searching through a physical archive, hundreds of relevant documents can be retrieved at the click of a button.

Digitization of printed data is done by scanning documents.

The scans are then converted into a text file using Optical Character Recognition (OCR).

This makes it possible, for example, to copy text or use a search term to find relevant content.

Like most algorithms and models, OCR is not 100 percent correct in recognizing and extracting text; letters are sometimes substituted or spaces added unnecessarily.

This problem worsens when the quality of the scanned paper is poor (a problem more common with old documents).

Disadvantages of low-quality text recognition are obvious.

First, the pieces will be difficult to read.

The reader may read over small errors or determine the correct word based on context.

It’s not ideal.

A bigger problem arises when text files are used by a search engine.

Incorrectly scanned words may prevent potential matches from being found, reducing the usefulness of the dataset.

[/vc_column_inner][/vc_row_inner][image_with_animation image_url=”5626″ alignment=”” animation=”Fade In” border_radius=”10px” box_shadow=”small_depth” max_width=”100%”][vc_custom_heading text=”Example of a difficult document to scan; text is dense and ink is faded in places.” font_container=”tag:p|font_size:12|text_align:left|color:%23a0a0a0″ use_theme_fonts=”yes” css=”.vc_custom_1620637040858{margin-bottom: 3% !important;}”]

These enhancements can be done manually, semi-automatically or automatically.

Digitization states general

The goal of this project was to develop an automatic algorithm that uses “word embeddings” to recognize and correct errors.

The algorithm tries to find potential incorrect variants for each word that appears in the documents.

The incorrect variants are stored along with the input word in a database.

This can be compared to a digital dictionary.

Once each word has been handled by the algorithm, this dictionary can be used to look up the correct “translation” for each incorrect word to correct the errors.

This algorithm would be given a large dataset of Dutch parliamentary documents as input.

These documents have been made available online under the name Staten-Generaal Digitaal[4] in a large digitization project by the Royal Library.

The dataset includes over 2.4 million scanned and OCR-processed pages of parliamentary documentation.

The Staten-Generaal Digitaal documents contain almost 200 years of Dutch political history – all parliamentary acts from 1815 to 1995 can be found in this dataset.

The major drawback is that these documents were printed on poor-quality paper, so the overall quality of the data deteriorated rapidly.

This means that many errors occurred during the OCR process that are now present in the digital text of the documents.

[image_with_animation image_url=”5627″ alignment=”” animation=”Fade In” border_radius=”10px” box_shadow=”small_depth” max_width=”100%”][vc_custom_heading text=”Example of an OCR scanning error in the wild: the computer thinks it says “WD,” but on paper it says “VVD.” font_container=”tag:p|font_size:12|text_align:left|color:%23a0a0a0″ use_theme_fonts=”yes” css=”.vc_custom_1620719328845{margin-bottom: 3% !important;}”]

For example, the first few years of data are both Dutch and French; between 1815 and 1839 this was the official language within the Netherlands.

In addition to the multilingualism of the data set, the Dutch language has changed over the years.

A risk here is that a model may start to see an old spelling as a wrong spelling.

The presence of two languages and old and modern spellings in the dataset can cause noise in the word embeddings. To minimize the impact of this problem, we chose to make the dataset smaller.

Ultimately, only documents from 1945 to 1995 were used.

By omitting data from previous years, the current dataset covers only 50 years of data, yet these are still 1.2 million pages consisting of 1.1 billion words.

After filtering the dataset, it is used to train the word embeddings .

The word embeddings of this dataset are then used in the algorithm to find potential errors.

Word embeddings

Word embeddings concerns a technique for representing words in a high-dimensional vector space.

In this vector space, words with similar meanings are intended to be close together.

The assumption of this project was that a word and its incorrectly scanned variants are close to each other in the vector space.

The embeddings are trained on the context a word appears in.

This context is the same for a word and the mis-scanned variants, so one can expect the embeddings to also be similar and close to each other in on axes.

It can be seen below that this assumption is correct; around the word “minister” there are – among other related words – also mis-scanned variants of that word.

[image_with_animation image_url=”5630″ alignment=”” animation=”Fade In” border_radius=”none” box_shadow=”none” max_width=”100%”][vc_custom_heading text=”Dimensionality reduction on a subset of the embeddings shows that words and their mis-scanned variants are close together.” font_container=”tag:p|font_size:12|text_align:left|color:%23a0a0a0″ use_theme_fonts=”yes” css=”.vc_custom_1620719337646{margin-bottom: 3% !important;}”]

This similarity makes it possible to express the similarity of two embeddings in a number.

Word2vec[5] – the technique used to train embeddings – uses cosine similarity[6] to calculate the similarity between embeddings.

This similarity score can have a value between -1 and 1, where a similarity of 1 means that two embeddings are the same, and a similarity of -1 means that the embeddings are opposite of each other.

These similarity scores make it possible to recognize words that are similar in context.

So this can be used to recognize scanning errors; these scanning errors have an embedding that is very similar to the embedding of the correct word.

But just a similarity between embeddings is not enough; for example, the word “secretary of state” would be recognized as a scanning error of “minister” because the embeddings are similar.

In addition to embedding similarity, another degree of similarity is needed to recognize an incorrect variant of a word.

Edit distance

Just looking at how close embeddings are to each other is not enough to identify potential OCR errors.

The preceding image shows that correct words are also close to “minister.

Broadly speaking, you want the algorithm to only improve words that are similar in lettering and structure to the word given as input.

For example, you don’t want to improve ‘secretary of state’ to ‘minister,’ these words are completely dissimilar.

In contrast, the words ‘minisier’ or ‘ministor’ are very similar to ‘minister,’ you do want the algorithm to improve these.

One way to express this ‘physical’ similarity of words in a number is the Levenshtein or edit distance[7].

The edit distance algorithm calculates how many edits are needed to change word A to word B.

For example, an edit is adding or removing a letter, or replacing one letter with another.

A low edit distance means the words are similar.

For example, “minisier” and “ministor” are only one edit away from “minister,” while “secretary of state” is 13 edits away from it.

The algorithm

Now that the two techniques – which serve to calculate word similarity – have been defined, it is possible to create the algorithm.

Before a word goes through the algorithm, a top 100 most similar words, the “candidates,” are first found through the embedding model.

This initial group of candidates is then filtered through a maximum edit distance.

The edit distance between the input word and the candidates must be a maximum of 2 if the input word is 6 letters or longer.

If the input word is shorter than 6 letters then the maximum edit score is 1.

This is done because two edits can change a lot with short words; the algorithm needs to be a little more precise here.

The candidates are then filtered by setting a minimum limit on the cosine similarity between the input and the candidates.

After these two filters, a group remains that the algorithm sees as potential false variants of the input.

In addition to the filters on word similarity, a few heuristics are used to optimize the quality of the improvements.

For example, numbers are not handled because there is no way to determine, based on context, whether the number is correct or not.

Also, the assumption is made that the correct variant of a word always occurs most often; if a “potential error” recognized by the algorithm occurs more often than the input word then it is not included in the dictionary.

An overview of how the algorithm works is shown in the following figure.

[image_with_animation image_url=”5628″ alignment=”” animation=”Fade In” border_radius=”10px” box_shadow=”small_depth” max_width=”100%”][vc_custom_heading text=”Visual overview of the algorithm.” font_container=”tag:p|font_size:12|text_align:left|color:%23a0a0a0″ use_theme_fonts=”yes” css=”.vc_custom_1620719343281{margin-bottom: 3% !important;}”]

These pairs are evaluated through an evaluation set that accompanies the data set.

This evaluation set contains automatically generated error-correction pairs.

Because this set is also automatically generated, there are also errors in it. It turns out that about 10% of the pairs of the algorithm were correct, but were not in the evaluation set.

This was reflected in the final scores of the algorithm.

Results

The evaluation of the algorithm was calculated in two ways.

First, the performance of the algorithm was expressed in terms of the usual precision, recall, and F1 scores.

These scores were calculated per cosine similarity limit, in order to get a feel for what the best value is; a high or low limit.

These scores were calculated at type level, that is, each error-correcting pair counts 1 time, even if the words occur more than 1 time in the text.

The scores can be seen below.

Here it can be seen that a cosine similarity boundary for this dataset is best set to 0.65.

[image_with_animation image_url=”5672″ alignment=”” animation=”Fade In” border_radius=”10px” box_shadow=”small_depth” max_width=”100%”][vc_custom_heading text=”Performance scores per cosine similarity boundary.” font_container=”tag:p|font_size:12|text_align:left|color:%23a0a0a0″ use_theme_fonts=”yes” css=”.vc_custom_1620719347964{margin-bottom: 3% !important;}”]

By running the dataset against a Dutch word list, we know that there are about 23.2 million incorrect-or nonexistent-words in the texts.

By also including word frequencies in the precision score, we can calculate how many errors are corrected by the automatic algorithm; these are 11.1 million.

So it can be said that this algorithm automatically corrects just under 50% of the errors.

Improvements

Although these results are good, there is still plenty of room for improvement.

For example, a conscious decision was made not to use existing word lists or other external data.

This study was a proof-of-concept to see how far one can get with just the given dataset and a smart algorithm.

Using an external glossary would improve a lot, though; it occurred that a recognized error was a correct word.

An example would be “on the one hand” and “on the other hand.

These words mean almost the same thing, so the embeddings are similar and they are 2 edits apart.

This kind of error of the algorithm can be easily avoided.

Another improvement can be found in the technique of training the word embeddings.

Nowadays, word2vec has been overtaken by smarter and better algorithms that would generate higher quality embeddings.

These better embeddings, along with external data, could cause the number of improved errors to go up.

[vc_row type=”in_container” full_screen_row_position=”middle” scene_position=”center” text_color=”dark” text_align=”left” top_padding=”2%” id=”bronnen” overlay_strength=”0.3″ shape_divider_position=”bottom” bg_image_animation=”none” shape_type=””]

[vc_row type=”full_width_background” full_screen_row_position=”middle” equal_height=”yes” content_placement=”middle” scene_position=”center” text_color=”dark” text_align=”left” top_padding=”5%” overlay_strength=”0.3″ shape_divider_position=”bottom” bg_image_animation=”none” shape_type=””]

Questions?

[/nectar_highlighted_text][/vc_column_inner][/vc_row_inner]