Since the rise of ChatGPT, no one can ignore it anymore: Large Language Models (LLMs) are here to stay. Several technology companies have developed advanced LLMs. Well-known examples are ChatGPT from OpenAI, LLaMA from Meta, and LaMDA from Google. Now I hear you thinking, “Very interesting, all this, but what am I supposed to do with it myself?”

In practice, finding a suitable business case for applying LLM solutions is a major challenge for many organizations and entrepreneurs. In this blog, we explain why you would want to start using LLMs for knowledge sharing within your organization. We highlight the challenges surrounding knowledge management and the potential role of LLMs.

What is knowledge management?

Knowledge management is the storing, organizing, managing, and sharing of knowledge within an organization. It contributes greatly to the success of an organization: when knowledge is not easily accessible, this can be damaging for the organization, because valuable time is spent searching for information rather than on core activities. The success of knowledge management depends not only on people, but also on processes and technology. Knowledge management systems have been developed to streamline the processes around it. Yet the reality is that many organizations still face challenges in this area. Some common challenges include:

- Fragmented knowledge silos

Do you recognize this? Different departments in your organization each have their own tools and methods for sharing data (think SharePoint, Slack, or Google Drive). That is useful within teams, but frustrating for organization-wide collaboration. Without clear rules for organizing documents, finding information becomes like searching for a needle in a haystack.

- Poorly accessible information

As your organization grows and collects more information, it becomes increasingly challenging for employees to find what they need. This becomes especially problematic if your knowledge system is based on directory structures, as in SharePoint.

- Lack of adoption among employees

When knowledge systems are not easy to use, employees are slow to adopt them. This leads to the loss of valuable knowledge and limits the company’s ability to learn and adapt quickly.

LLMs to the rescue (?)

LLMs are a type of generative model: they are designed to generate new content based on a given input. Even though traditional LLMs are powerful, they have limitations. For example, they require large amounts of data to be trained, they need a lot of computing power, and they cannot answer questions about context on which they were not “pre-trained.” In practice, this means that a general LLM (such as ChatGPT) probably cannot correctly answer specific questions about your business data. Such an LLM is, after all, trained on data from the Internet. It therefore only has knowledge of information that is publicly available on the Internet. So it makes little sense to ask ChatGPT a question about your sales over the past year. You will then simply be referred to your own finance department.

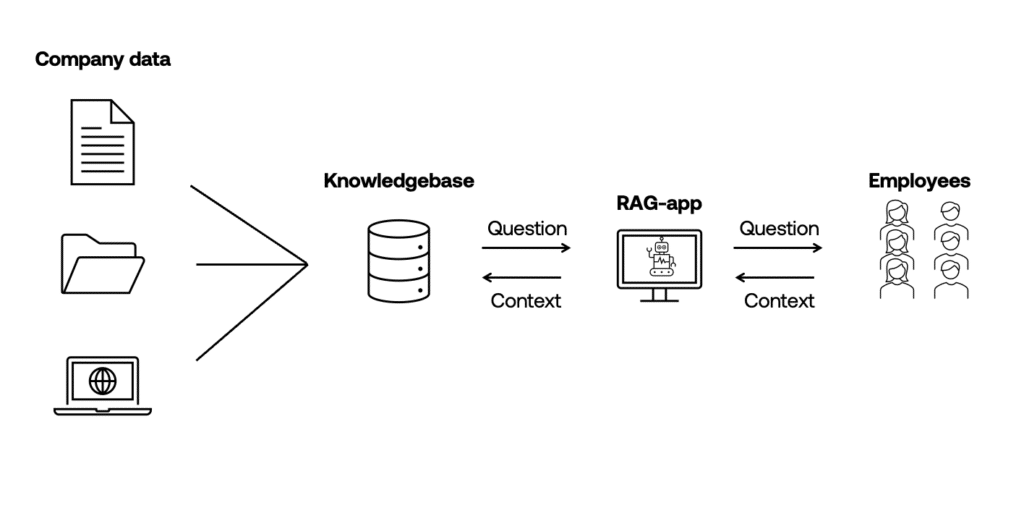

Fortunately, in the world of AI, there is always a solution. In this case, it is (don’t be alarmed) ‘Retrieval Augmented Generation,’ or RAG. To break that down for a moment: ‘Retrieval’ stands for ‘retrieving,’ ‘Augmented’ for ‘supplementing,’ and ‘Generation’ for ‘producing something new.’ Retrieval models are not new. They are designed to retrieve relevant information from a given knowledge base. They often use semantic search techniques to find and rank the most relevant information based on a query. However, retrieval models lack the capacity to generate new content. RAG addresses this by combining the generative power of traditional LLMs with the specificity of retrieval models. For your organizational data, RAG could work as follows:

- Based on a question about your organization, a retrieval model retrieves relevant context from your own knowledge base

- An LLM answers the question based on the retrieved context and includes the source of that context in its answer.

This approach addresses each of the challenges described earlier:

- By linking the separate knowledge systems to the RAG model, the problem of fragmented knowledge silos is resolved. Instead, a single knowledge portal now exists where employees can obtain organizational information.

- The problem of poorly accessible information caused by deep and complicated directory structures disappears thanks to a simplified search method. Employees only need to enter a question or search query through an interactive interface. This makes it easier for everyone to get relevant information, even as the organization grows and collects more data.

- Because interacting with the knowledge management system becomes more user-friendly, adoption among employees increases.
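To make the two RAG steps described above (retrieve relevant context, then let an LLM answer from it) a little more concrete, here is a minimal Python sketch. It rests on several assumptions: the knowledge base, file names, and function names are all hypothetical, and simple word-overlap scoring stands in for the semantic search a real retrieval model would use. No LLM is actually called; the sketch only builds the prompt that would be sent to one.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base: (source, text) pairs standing in for documents
# collected from systems such as SharePoint, Slack, or Google Drive.
KNOWLEDGE_BASE = [
    ("hr/leave-policy.md", "Employees receive 25 vacation days per year."),
    ("finance/expenses.md", "Expense claims must be submitted within 30 days."),
    ("it/vpn-guide.md", "Connect to the VPN before opening internal tools."),
]


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, top_k=1):
    """Step 1: return the top_k passages that best match the question.

    Assumption: word overlap is a stand-in for real semantic search.
    Passages with no overlap at all are dropped.
    """
    query = Counter(tokenize(question))
    scored = [
        (cosine_similarity(query, Counter(tokenize(text))), source, text)
        for source, text in KNOWLEDGE_BASE
    ]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(source, text) for score, source, text in scored[:top_k] if score > 0]


def build_prompt(question, passages):
    """Step 2: combine the question and retrieved context into an LLM prompt.

    Each passage is tagged with its source so the answer can cite it.
    """
    context = "\n".join(f"[{source}] {text}" for source, text in passages)
    return (
        "Answer the question using only the context below and cite the source.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    question = "How many vacation days do employees get?"
    passages = retrieve(question)
    # In a real setup, this prompt would be sent to an LLM of your choice.
    print(build_prompt(question, passages))
```

Keeping retrieval and prompt building as separate functions mirrors the two steps in the list: the retrieval part can later be swapped for a proper semantic search over your own systems without touching the part that talks to the LLM.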

Conclusion

It’s an exciting time for organizations looking to capitalize on the power of LLMs.

The emergence of these new technologies, with specific focus on Retrieval Augmented Generation, offers interesting opportunities for addressing challenges within knowledge management.