Implementing MLOps

The state of data science

Data science is a fairly young field and is still maturing in most organizations. Some organizations are further along than others, but in general they follow a recognizable pattern:

Phase 1: the Lab

It starts with a Lab: a tinkering shed for alchemists who use complex algorithms to turn data into gold (or at least we think so).

Phase 2: in production

Eventually a serious business case is found and, a few months later, there actually is a prediction model that works well. Admittedly, it then takes another 9 months of rewriting code and discussions with IT and architects (target landscape, supported tooling, DTAP (development, test, acceptance, production), security) before it is finally in production, but never mind: it stands, it runs and it works! Hooray!

Until suddenly it no longer works. The predictions are off, users want something else, or it turns out the process broke down a month ago. But who is going to fix it? Jantje, who once built the model, is long gone. Pietje can’t make sense of Jantje’s undocumented notebooks and, after several attempts at repair, concludes that he’d better rebuild it “from scratch”.

Phase 3: maturity

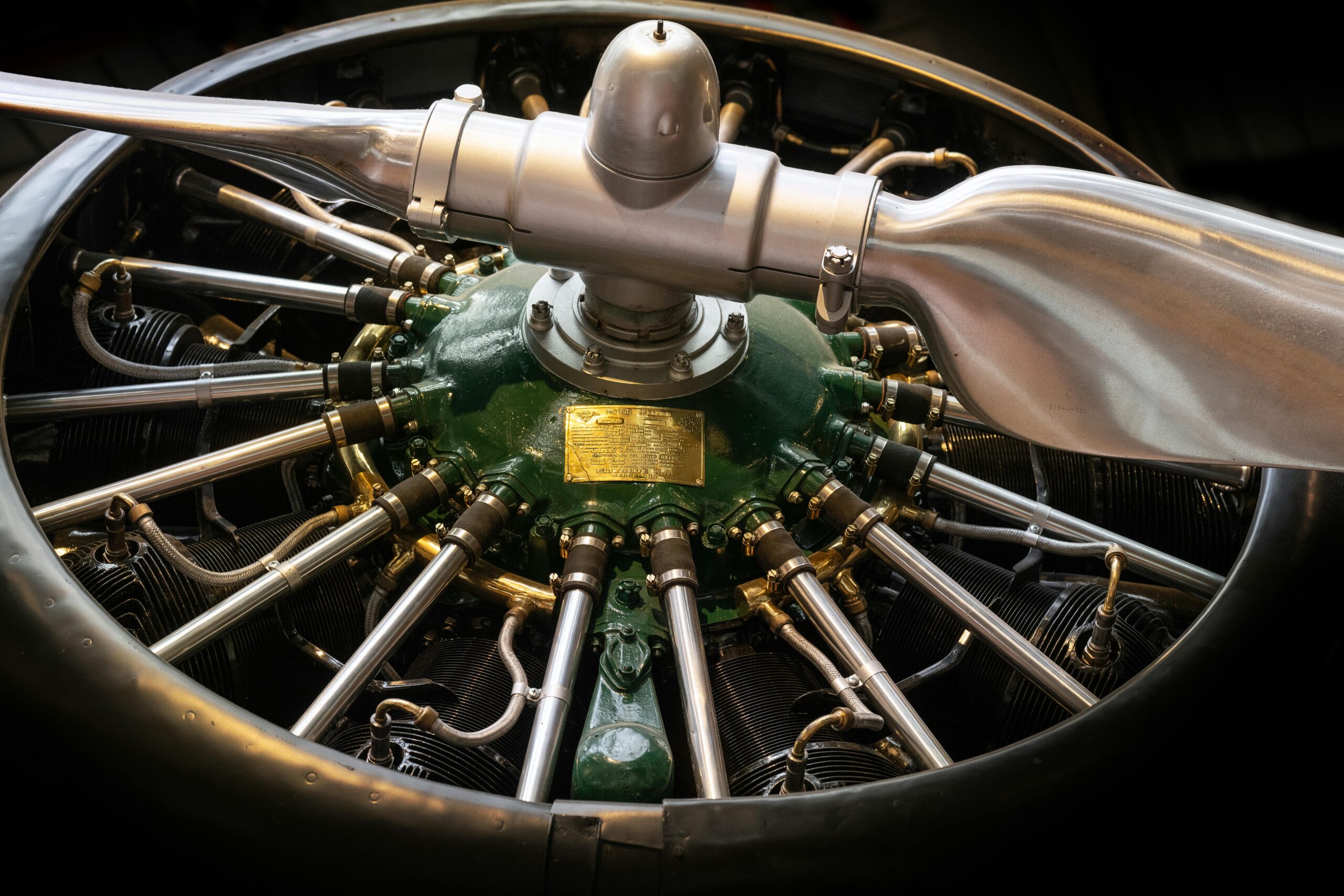

Wiser after all that trial and error, the team now runs like a well-oiled machine. Retrieving, reading and sharing data, managing model experiments, automating the deployment of AI-driven APIs and apps with CI/CD, explaining predictions: every team member does it with the push of a button and a smile. Right. Admittedly, this part is still science fiction.

MLOps to the rescue

Summarizing the above scenario a little more formally:

- Phase 1: Experiment
  - Data science operates in isolation from business and IT
  - Few concrete results
  - No real value delivered

- Phase 2: Going live
  - Output is put into production
  - But management, monitoring, reproducibility, security, etc. are not yet set up
  - First value is delivered, but not sustainably

- Phase 3: Maturity
  - Processes are effective, efficient, reproducible and safe
  - Value is delivered continuously

And that’s where MLOps comes in: it’s a way to reach Phase 3.

What is MLOps?

“With Machine Learning Model Operationalization Management (MLOps), we want to provide an end-to-end machine learning development process to design, build and manage reproducible, testable, and evolvable ML-powered software.”

– CDF sig-MLOps

“the extension of the DevOps methodology to include Machine Learning and Data Science assets as first class citizens within the DevOps ecology”

– also CDF sig-MLOps

“MLOps empowers data scientists and app developers to help bring ML models to production. MLOps enables you to track / version / audit / certify / re-use every asset in your ML lifecycle and provides orchestration services to streamline managing this lifecycle.”

– Microsoft

In summary, you could say MLOps is DevOps but for machine learning / data science.

- In DS/ML/AI, we also end up developing software

- So we can apply DevOps

- But DS/ML/AI encompasses more than software development: think datasets, models, training results

- So an extension to DevOps is needed: MLOps

The goal of MLOps is to make our data science processes:

- Effective

- Demonstrably effective

- Efficient (fast)

- Reproducible

- Safe

- Robust/reliable

- Easy

OK, sounds good: a new magic formula… So if you invoke MLOps, all data science problems magically disappear, just like agile was supposed to solve all business problems before?

What is MLOps not?

Everything is bad → we introduce MLOps → all is well.

No magic spell, then.

- There are best practices, but…

- You need to set up your own MLOps for your own processes, tailored to your own needs and priorities

Getting specific

Now a little less high-level: what kind of processes are we talking about?

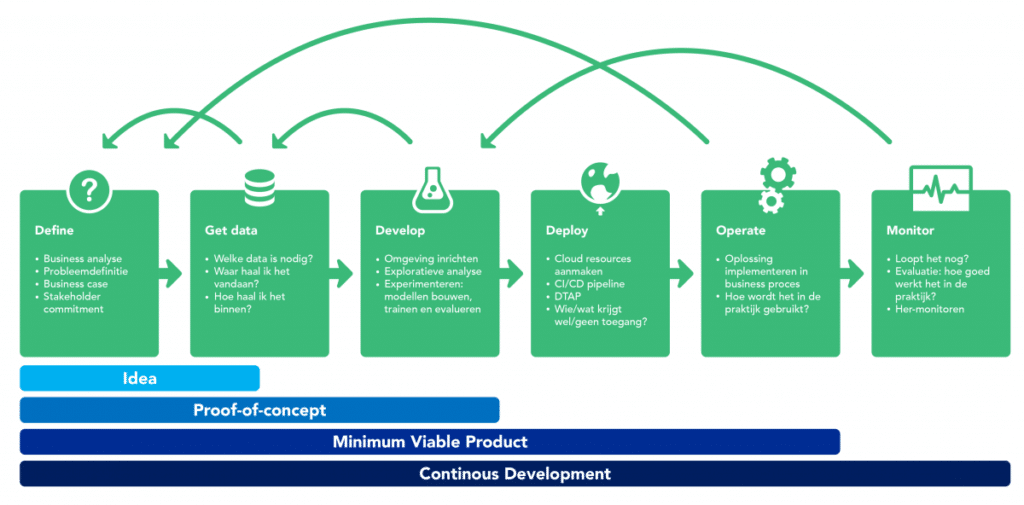

Data science life cycle

- Data version control: how do we keep a grip on (creating and training models with) different datasets?

- Experiment management: how do we keep track of the many modeling runs (dataset + model type + variant + hyperparameters + score)?

- Deployment & CI/CD: how do we put models live in APIs or apps and update them quickly?

- Explanation: how can we understand and explain model predictions?

- Monitoring: how can we set up feedback loops to monitor how models perform in practice?
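To make the monitoring question a bit more concrete, here is a minimal sketch of one possible feedback check. It assumes you keep a sample of a feature's training values and collect the same feature from live traffic. The function name `mean_shift_alert` and the threshold of 3 standard errors are hypothetical choices for illustration, not a standard; dedicated monitoring tools offer more robust drift tests.

```python
import math
import statistics


def mean_shift_alert(train_values, live_values, threshold=3.0):
    """Hypothetical drift heuristic: flag when the live mean deviates from the
    training mean by more than `threshold` standard errors.

    The standard error uses the training standard deviation and the size of
    the live sample. Requires at least two training values.
    """
    train_mean = statistics.fmean(train_values)
    train_sd = statistics.stdev(train_values)
    live_mean = statistics.fmean(live_values)
    # Standard error of a mean over len(live_values) draws from the training distribution
    std_err = train_sd / math.sqrt(len(live_values))
    z = abs(live_mean - train_mean) / std_err
    return z > threshold, z


train = [10.0, 11.0, 9.5, 10.5, 10.2, 9.8, 10.1, 9.9]
live_ok = [10.1, 9.9, 10.3, 9.7]
live_shifted = [12.5, 12.9, 13.1, 12.7]

print(mean_shift_alert(train, live_ok))       # no alert expected
print(mean_shift_alert(train, live_shifted))  # alert expected
```

In practice such a check would run on a schedule and feed an alert or a retraining trigger. That closes the feedback loop.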

Case study 1

- Pietje retrieves data with an SQL query

- Pietje forwards a copy to Marietje

- Pietje & Marietje train models on the dataset

- Pietje improves his query and continues with a new dataset

- Marietje is still working with the old set

- Marietje applies a transformation in a notebook and overwrites her dataset

- The resulting models are now derived from 3 different datasets

Solution 1: incorporate dataset fixes neatly into the code, so everyone works from one unified workflow.

Solution 2: Data version control (for when multiple datasets are really needed)
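Both solutions come down to treating the dataset as something you can regenerate and identify. Below is a minimal sketch using only the Python standard library. The query and the cleaning step live in one function, so Pietje's improved query and Marietje's notebook transformation would become edits to the same code. A content hash then gives every resulting dataset a version identifier. The table, the columns and the function names are hypothetical; dedicated data version control tools handle this far more thoroughly.

```python
import hashlib
import json
import sqlite3


def build_dataset(conn):
    """Single source of truth: the query plus every fix/transformation, in code."""
    rows = conn.execute(
        "SELECT customer_id, age, revenue FROM customers ORDER BY customer_id"
    ).fetchall()
    # Example fix that would otherwise live in someone's notebook:
    # drop rows with a missing age and express revenue in thousands.
    return [
        {"customer_id": cid, "age": age, "revenue_k": revenue / 1000}
        for cid, age, revenue in rows
        if age is not None
    ]


def dataset_fingerprint(records):
    """Content hash of the dataset: identical data gives an identical version id."""
    payload = json.dumps(records, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]


# Hypothetical in-memory database standing in for the real source
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id INTEGER, age INTEGER, revenue REAL)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [(1, 34, 52000.0), (2, None, 31000.0), (3, 45, 78000.0)],
)

data = build_dataset(conn)
print(len(data), dataset_fingerprint(data))
```

If you log the fingerprint alongside each trained model, it becomes immediately visible when two models were trained on different versions of the data.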

Case study 2

Pietje’s workflow for training and evaluating a new model is as follows:

- Download dataset and place in folder

- Run a .py script

- In train.py, run all the code for model 2 except the plots

- In eval.py, edit the line with the model filename and save the results to a new file

This method scores poorly on reproducibility, reliability and scalability.

Solution: standardized scripts plus an experiment management tool to log training results.
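As an illustration of that solution, here is a minimal sketch of a standardized training entry point. It appends every run (dataset version, model settings, score) to a JSON Lines log, so nobody has to edit lines in eval.py by hand. The toy model (a one-parameter threshold classifier), the function names and the file name `experiments.jsonl` are hypothetical. An experiment management tool would replace the hand-rolled log.

```python
import json
import tempfile
import time
from pathlib import Path


def train_threshold_model(xs, ys, threshold):
    """Toy 'model': predict 1 when x >= threshold; returns accuracy on (xs, ys)."""
    predictions = [1 if x >= threshold else 0 for x in xs]
    correct = sum(p == y for p, y in zip(predictions, ys))
    return correct / len(ys)


def run_experiment(xs, ys, params, dataset_version, log_path):
    """Train, evaluate and log one run with the information needed to reproduce it."""
    score = train_threshold_model(xs, ys, params["threshold"])
    record = {
        "timestamp": time.strftime("%Y-%m-%dT%H:%M:%S"),
        "dataset_version": dataset_version,
        "model_type": "threshold",
        "params": params,
        "accuracy": score,
    }
    # Append-only log: one JSON object per line, one line per run
    with open(log_path, "a", encoding="utf-8") as f:
        f.write(json.dumps(record) + "\n")
    return record


def best_run(log_path):
    """Read the log back and return the run with the highest accuracy."""
    with open(log_path, encoding="utf-8") as f:
        runs = [json.loads(line) for line in f]
    return max(runs, key=lambda r: r["accuracy"])


xs = [1, 2, 3, 4, 5, 6]
ys = [0, 0, 0, 1, 1, 1]

# Temporary directory keeps the example self-contained
with tempfile.TemporaryDirectory() as tmp:
    log = Path(tmp) / "experiments.jsonl"
    for t in (2, 4, 6):
        run_experiment(xs, ys, {"threshold": t}, dataset_version="v1", log_path=log)
    best = best_run(log)

print(best["params"], best["accuracy"])
```

Every run now goes through the same code path and leaves a record. Comparing or reproducing runs no longer depends on remembering which lines were changed.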

Tooling

Are there tools for MLOps? Sure. In fact, they are popping up like mushrooms. Some tools are broad and offer functionality for (virtually) the entire life cycle. Other tools focus on one or more specific components. A selection, by category:

- Full life cycle

- Data version control

- Experiment management

- …

How to get started with MLOps?

Geez, more tools… As if it wasn’t complicated enough. Do we need to get into that already? Yes and no.

On the one hand: yes. If you want to be effective, you have to invest in professionalization.

On the other hand: no. Don’t start bringing in extra tools that solve problems you don’t have. Much can also be solved with smarter processes and with tools you may already have in place (git, Docker, template scripts).

There is no single right MLOps setup. The right setup fits your own situation: the parts that are relevant to you and the bottlenecks you actually face. You don’t build it all at once, but step by step. Apply an agile mindset to your MLOps setup:

- set your priorities

- solve one thing first, then move on to the next

- evaluate and adjust

A few more general tips and principles:

- Production mindset from the start
  - Prepare from the beginning for how your work will go into production, so you don’t have to rebuild anything later
  - Good templates are half the battle

- Data science as code
  - Everything must be uniformly reproducible (i.e. scriptable): training models, creating cloud resources, setting up the Python environment, preprocessing, deployment

- Be pragmatic
  - Think big, but start small
  - Focus on what is relevant to you
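One way to make "everything must be uniformly reproducible" testable is to drive every step from a single script with an explicit configuration and random seed, so a run can be repeated exactly. Below is a minimal sketch under those assumptions. The step functions and the configuration fields are hypothetical placeholders for your own preprocessing and training code. Creating cloud resources or Python environments would typically be scripted with tools you already use.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class PipelineConfig:
    """Hypothetical configuration: every setting that influences the result lives here."""
    seed: int = 42
    n_samples: int = 100
    test_fraction: float = 0.2


def preprocess(cfg, rng):
    """Placeholder for data preparation: generate and shuffle records."""
    data = [(i, i % 3) for i in range(cfg.n_samples)]
    rng.shuffle(data)
    return data


def split(data, cfg):
    """Deterministic train/test split of the (already shuffled) data."""
    n_test = int(len(data) * cfg.test_fraction)
    return data[n_test:], data[:n_test]


def run_pipeline(cfg):
    # One local RNG seeded from the config: no hidden global random state
    rng = random.Random(cfg.seed)
    data = preprocess(cfg, rng)
    train, test = split(data, cfg)
    return train, test


first = run_pipeline(PipelineConfig())
second = run_pipeline(PipelineConfig())
print(first == second)  # same config and seed, identical result
```

The design choice here is that randomness is passed in explicitly instead of coming from global state. Two runs with the same configuration are then guaranteed to produce the same split.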